Trusted SuperPOD

The supercomputing world is evolving to fuel the next industrial revolution, which is driven by a new perception of how massive computing resources can be brought together to solve mission-critical business problems. Atos and NVIDIA are ushering in a new era in which enterprises can deploy world-record-setting supercomputers using standardized components in weeks.

Designing and building scaled computing infrastructure for AI requires an understanding of the computing goals of AI researchers in order to build fast, capable, and cost-efficient systems. Defining infrastructure requirements is often difficult because research needs are a moving target and AI models, being proprietary, frequently cannot be shared with vendors. Additionally, crafting robust benchmarks that represent the overall needs of an organization is a time-consuming process.

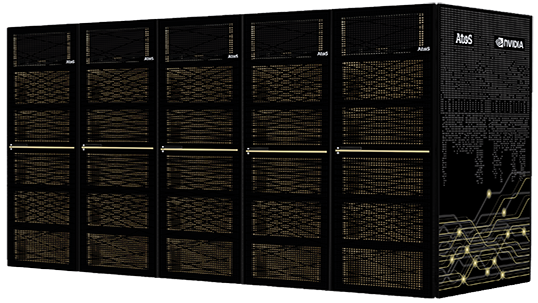

NVIDIA DGX™ SuperPOD

It takes more than just many GPU nodes to achieve optimal performance across a variety of model types. To build a flexible system capable of running a multitude of DL applications at scale, organizations need a well-balanced system, which at a minimum incorporates:

- Powerful GPU nodes with many GPUs, a large memory footprint, and fast connections between the GPUs for scale-up computing to support the variety of DL models in use.

- A low-latency, high-bandwidth network interconnect designed with the capacity and topology to minimize bottlenecks.

- A storage hierarchy that can provide maximum performance for the various dataset structure needs.

The SuperPOD architecture has been designed to answer these requirements.

Solving the Challenge of Extreme Multi-Node AI Training

The NVIDIA DGX SuperPOD™ with NVIDIA DGX™ A100 systems is the next-generation artificial intelligence (AI) supercomputing infrastructure, providing the computational power necessary to train today’s state-of-the-art deep learning (DL) models and to fuel innovation well into the future.

The DGX SuperPOD delivers groundbreaking performance, deploys in weeks as a fully integrated system, and is designed to solve the world’s most challenging computational problems.

NVIDIA DGX SuperPOD has demonstrated world-record-breaking performance and versatility in MLPerf 0.6, setting eight records in AI performance. These results offer proof of DGX SuperPOD’s ability to solve this scaling problem with an ultradense compute solution that taps into the innovative architecture of the DGX system combined with high-performance networking from Mellanox and high-performance storage.

Atos AI SUITE – FastML Engine

On top of the SuperPOD solution, Atos can add a set of value-added software, including the Codex AI Suite – FastML Engine, a data science platform that runs at scale on large AI infrastructure and hides the complexity of using a supercomputer from ML developers.

Our Machine Learning Engine features include management of standard AI artifacts such as datasets, frameworks, and experiments; AI job submission on the SuperPOD; JupyterLab and monitoring as a service for ease of AI development; and simple hyperparameter instrumentation that launches many jobs on the SuperPOD in one action, speeding AI exploration.
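To illustrate what launching many jobs in one action can mean in practice, the sketch below expands a hyperparameter grid into one job specification per combination. It is not the FastML Engine API: the function name `expand_sweep`, the job dictionary layout, and the `python train.py` command are all hypothetical and chosen only for illustration.

```python
from itertools import product


def expand_sweep(base_command, grid):
    """Return one job spec per combination of hyperparameter values.

    Hypothetical helper: a real platform would hand these specs to its
    own scheduler instead of printing them.
    """
    names = sorted(grid)  # fixed order keeps job numbering reproducible
    jobs = []
    for index, values in enumerate(product(*(grid[name] for name in names))):
        params = dict(zip(names, values))
        flags = " ".join(f"--{name}={value}" for name, value in params.items())
        jobs.append(
            {
                "name": f"sweep-{index:03d}",
                "command": f"{base_command} {flags}",
                "params": params,
            }
        )
    return jobs


# Assumed example grid: 2 learning rates x 3 batch sizes = 6 jobs.
grid = {"lr": [0.1, 0.01], "batch_size": [256, 512, 1024]}
jobs = expand_sweep("python train.py", grid)
for job in jobs:
    print(job["name"], job["command"])
```

Each resulting entry could then be submitted as an independent job, so a single sweep definition fans out across the cluster.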

Our ML Engine is fully integrated with the NVIDIA DGX software stack and can leverage NVIDIA GPU Cloud (NGC) images and artifacts. The ML Engine also relies on a hybrid orchestration layer that can interact with the SuperPOD job scheduler and deploy interactive sessions on dedicated service nodes, so production jobs can run in parallel with code development sessions.

Securing the SuperPOD with Atos CyberSecurity products and services

Securing machine learning operations is becoming key for AI research centers and industrial companies as cyberattacks get more sophisticated and more aggressive every day. Atos, the #1 in Europe and a global leader in cybersecurity, can support you in deploying a Trusted SuperPOD through its Cybersecurity Services (Consulting, Integration, Managed Security Services Provider) and its multi-certified Cybersecurity Products.

Atos Cybersecurity offers a full spectrum of round-the-clock, worldwide solutions covering Trusted Digital Identities, Digital Workplace Security, Hybrid Cloud Security, Industrial & IoT Security, Data Protection & Governance, and Advanced Detection & Response.

Access a Global Team of AI Experts

SuperPOD is more than great hardware. It’s built on AI expertise that continually delivers higher performance, driven by thousands of researchers and engineers who use this platform to bring new innovations to market. This global team of AI experts uses DGX SuperPOD every day and is ready to make your AI ambitions a reality. DGX SuperPOD simplifies the design, deployment, and operationalization of massive AI infrastructure with a validated design that’s offered as a turnkey solution through our value-added resellers. Now, every enterprise can scale AI to address its most important challenges with a proven approach that’s backed by 24×7 enterprise-grade support.

NVIDIA DGX A100: The universal system for AI infrastructure

As the compute foundation of NVIDIA DGX SuperPOD, NVIDIA DGX™ A100 is the universal system for all AI workloads—from analytics to training to inference. DGX A100 sets a new bar for compute density, packing 5 petaFLOPS of AI performance into a 6U form factor.

DGX A100 also offers the unprecedented ability to deliver fine-grained allocation of computing power, using the Multi-Instance GPU capability in the NVIDIA A100 Tensor Core GPU, which enables administrators to assign resources that are right-sized for specific workloads. This ensures that the largest and most complex jobs are supported, along with the simplest and smallest.
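As a rough illustration of right-sizing, the sketch below picks the smallest Multi-Instance GPU profile that satisfies a workload's memory and compute needs. It assumes the 40 GB variant of the A100 and the profile names NVIDIA documents for it; the helper `pick_profile` and its selection rule are hypothetical and not part of any NVIDIA tool.

```python
# (profile name, compute slices, memory in GB), smallest first.
# Assumption: profiles of a 40 GB A100; other GPU variants differ.
MIG_PROFILES = [
    ("1g.5gb", 1, 5),
    ("2g.10gb", 2, 10),
    ("3g.20gb", 3, 20),
    ("4g.20gb", 4, 20),
    ("7g.40gb", 7, 40),
]


def pick_profile(min_memory_gb, min_compute_slices=1):
    """Return the smallest profile meeting both requirements.

    Hypothetical helper for illustration only.
    """
    for name, slices, memory_gb in MIG_PROFILES:
        if memory_gb >= min_memory_gb and slices >= min_compute_slices:
            return name
    raise ValueError("workload does not fit on a single 40 GB A100")


# Assumed example workloads: a small inference service, a mid-size
# training job, and a job that needs the whole GPU.
for need_gb in (4, 16, 30):
    print(need_gb, "GB ->", pick_profile(need_gb))
```

An administrator would still create the chosen instances with the vendor's own tooling; the point here is only that small jobs map to small slices while the largest jobs keep a full GPU.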

DGX A100 runs the DGX software stack with optimized software from NGC. Its combination of dense compute power and complete workload flexibility makes it an ideal choice for both single-node deployments and large-scale Slurm and Kubernetes clusters.

Press releases

“As a long-standing partner, Nvidia welcomes the arrival of Atos ThinkAI, which takes a holistic approach to strategize our common client’s AI journey. In complementing NVIDIA DGX SuperPOD™, Atos ThinkAI combines the in-depth industry knowledge with data science proficiency to envision, architect and deploy the E2E secured AI-empowered supercomputing solution as we did in Linköping University at a much faster and more efficient pace. Together, we support any industry, any academic institution for accelerated time to innovation with actionable insight”

Carlo Ruiz

Head AI Data Center Solution for EMEA at NVIDIA